Creating a Programming Language

A high-level overview of how to go about creating your own programming language

- Jacob

- 12 min read

Introduction

Have you ever wondered how programming languages are created? Designing your own programming language may sound intimidating at first, but it is a rewarding project that will deepen your understanding of how software fundamentally works.

In this post, I’ll provide a high-level overview of what you need to consider if you want to create your own programming language. I’ll cover some key design decisions, including syntax and semantics, as well as an overview of compilers, interpreters, and transpilers. Whilst this is not a tutorial, I hope to clear up some of the mystery around language design and give you enough context to start creating your own language!

Designing the Language

When designing your language, you need to make decisions in two key categories:

- Syntax - how the language looks

- Semantics - how the language behaves

Syntax

The syntax of the language defines what code is and isn’t allowed. It breaks the language down into smaller parts (i.e. “building blocks”) that combine to form the whole language.

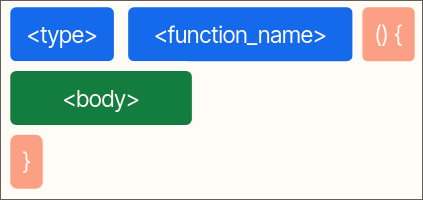

Example syntax of a function in C

The syntax is critical for understanding the code - it allows us to turn the text into a more abstract representation that we can reason about, and convert into something your computer can run.

We usually define a syntax with a grammar. This is a formal definition of the “building blocks” of the language. There are several notations for defining a grammar. I am using a notation similar to Backus-Naur Form (BNF).

In this notation, each line defines a rule. On the left of the arrow is a non-terminal - a component that is composed of other components. To the right of the arrow defines the how this non-terminal is constructed. It can be a combination of more non-terminals and/or terminals - fixed values that are not composed of anything else. Terminals are put in quotes.

There are different ways to compose terminals and non-terminals. Concatenation is defined by writing two terms next to each other. The | symbol represents “or”, meaning the value to the left or the right of it could be used. Similar to regex, * and + represent repetitions of a value, where * means 0 or more, and + means 1 or more.

Here is a grammar for a simple mathematical expression, composed of numbers, variable names (identifiers), brackets, and the four basic operations (+, -, *, /):

expression -> term ;

term -> factor (("+" | "-") factor)* ;

factor -> primary (("*" | "/") primary)* ;

primary -> number | identifier | "(" expression ")" ;

# A number is one or more digits, optionally followed by a "." and more digits

number -> digit+ ("." digit+)?

digit -> "0" | "1" | "2" | "3" | "4" | "5" | "6" | "7" | "8" | "9"

# An identifier is any string of letters, upper or lower case

identifier -> ("a" | ... | "Z")+

This grammar would accept, for example, foo + (1 + 6 * bar) / 12.03 + baz.

Semantics

Now we know how the language looks, we need to define how it will actually run. There are a lot of decisions to be made here, including:

- How memory is managed (garbage collector, reference counting, manual memory management, ownership model, etc.)

- How do types work (statically or dynamically checked, strongly or weakly typed, etc.)

- How are errors handled (error codes, exceptions, errors as values, etc.)

- How are variables passed to functions (pass by reference, pass by value, etc.)

- Many, many more

Diving into all the details and options for each of these would make this blog post really long, so I leave it up to you to research this yourself. Some useful textbooks on this are:

- Crafting Interpreters by Robert Nystrom

- Engineering a Compiler by Keith D. Cooper & Linda Torczon

- Compilers: Principles, Techniques and Tools by Alfred Aho, Monica Lam, Ravi Sethi, and Jeffrey Ullman

- Types and Programming Languages by Benjamin C. Pierce

- Programming Language Pragmatics by Michael L. Scott

Running the Code

Okay, so we have defined exactly how we want our language to look and work. How do we actually get our computer to be able to run the code?

There are 3 main approaches to running code:

- Compiling - converting the source code into something the CPU understands directly

- Interpreting - a program that reads your source code part by part and runs code to do that operation

- Transpiling - converting the source code into another language

We will come back to the details of these methods later, but first, we will look at the steps that all of these approaches share.

Compiler Overview

A common compiler structure is shown in the image below. Here, the mountain represents our compiler, where the top of the mountain represents an abstract form, and the bottom a more concrete form. The idea is to work from the source code towards a more abstract representation, where we can understand the code, then convert that back down into a form the computer can run.

An overview of the compiler pipeline

Source: Crafting Interpreters. License here

When I say “compiler” here, I am using it broadly to encompass compilers, interpreters, and transpilers.

The main steps are:

- Scanning

- Parsing

- Analysis

- Code generation (compiling, interpreting, or transpiling)

Scanning

The first step of our compiler is to take the source code and turn it into tokens. Tokens are essentially words with a bit of data attached to them.

Common data attached to tokens includes:

- A token type (e.g.

IDENTIFIER,NUMBER,PLUS,CLOSING_BRACKET, etc.) - A line (and sometimes column) number - useful for reporting errors

- The lexeme - the actual string value of the token

For example, let’s look at a line of C code:

int foo = 5;

Here, the token for foo could hold the following information:

type: "identifier"

lexeme: "foo"

line: 1

column: 4

The reason for converting our source code to tokens is that it makes the next part (parsing) easier.

Parsing

Our parser will take the tokens from the scanner, and produce an Abstract Syntax Tree (AST) using the syntax grammar. This is a tree structure that is comprised of a “root” node for the whole program, and children nodes that represent all the statements, declarations, and expressions.

For example, the syntax tree for the expression 5 + 4 * 6 may look like this:

Abstract Syntax Tree (AST) of `5 + 4 * 6`

Analysis

With our AST, we can efficiently perform any analysis of the program that we wish. This often involves checking correctness (e.g. type checking, making sure a variable exists in scope, etc.), and gathering information for future stages. This information can be essential in performing optimisations and code generation. For example, if the language is statically typed, the code generated will be dependent on the types of the variables used. Therefore, we need to work out the type of each variable to be able to generate the correct code.

Optimisations

A good compiler will also perform optimisations to the code that can help it run faster or with less memory usage. Naive code generation does not always produce optimal code. Using the information gained from prior analysis of the code, it can sometimes be made more efficient. For example, the code can sometimes be reordered to achieve better cache utilisation, without changing the result of the code. For more information, Crafting Interpreters briefly touches on optimisations, and Engineering a Compiler is often recommended for getting started with compiler optimisations. There is also a Wikipedia article for a theoretical overview.

Code Generation

Once we have analysed our AST, we can start converting it to a form that our computer can understand. As mentioned before, we have three methods of doing this. Let’s look at each one in more detail.

Compiling

When compiling, we convert our AST into machine code.

Machine code is a list of instructions, encoded in binary. Each instruction has an opcode and often one or more operands. An opcode is a binary value that tells the CPU which instruction to run. Operands are any data that the operation needs.

For an example, we can look at assembly code. Assembly code can be thought of as a human-readable form of machine code, in that each instruction in assembly is typically mapped to a single instruction in machine code. The following x86 assembly code adds the numbers 40 and 2.

mov eax, 40 ; Move `40` into register `eax`

mov ebx, 2 ; Move `2` into register `ebx`

add eax, ebx ; Add registers `eax` and `ebx` together

Here, mov and add are the opcodes. eax, ebx, 40, and 2 are the operands.

Different CPUs may have different architectures, each of which will have its own instruction set. This means that machine code varies per CPU that you are compiling for.

Once we have machine code, we wrap it in an executable format, which is different on each operating system. For example, Windows uses the .exe file format for executable files. Ignoring dynamic dependencies, these executable files are self-contained, meaning that anyone can run them without having to install any software first.

So, to generate an executable directly, we will need to convert to an executable file for each individual Operating System (OS) and architecture. This is a lot of work, therefore it is common for a language to turn the AST into an intermediate representation (IR). An IR is very similar to machine code, but it is abstracted from a specific CPU or OS. The IR compiler will handle compilation to a specific architecture and OS. For C, C++, and Rust, the IR used is LLVM. The LLVM compiler can then convert into any OS/architecture that is required.

Interpreting

Alternatively, we can have a program that runs alongside our code and executes it part by part. Note that to execute the code, the interpreter needs to be installed on the target machine first.

Here are two common approaches to interpreting:

Tree walk interpreter

- For every type of node in our AST, we have some code in the interpreter that will perform the action we expect

- For example, if our interpreter is written in C, a

printin our language would be executed by usingprintfin C - These are easy to develop, but a naive implementation is slow, and therefore this isn’t often used for real languages

Bytecode

- This is a mix between compiling and interpreting

- We convert our AST to bytecode, which is an IR that we define (or we can use an existing bytecode, such as Java bytecode)

- We create a language Virtual Machine (VM) that can read this bytecode and perform the action we expect in the VM’s language

- This is how Python and Java work

Language VMs are different from the kind of VMs that simulate a whole computer

A benefit of interpreting is that we can run our code on any machine that has our interpreter/VM installed - we do not need a separate executable file per architecture/OS.

Transpiling

Finally, transpiling is the process of taking our source code and turning it into another existing programming language. This allows us to leverage the ecosystem of the other language.

For example, TypeScript is transpiled to JavaScript, which allows it to benefit from the existing support and optimisations of JavaScript on all modern browsers.

The downside of this is that we are heavily tied to the features and ecosystem of the language that we are transpiling to (the target language). It is still possible to add new features, but they must map to features in the target language, which can restrict what is possible or lead to inefficient code.

Comparison

Here is a comparison of the different approaches:

| Topic | Compiling | Interpreting | Transpiling |

|---|---|---|---|

| What happens? | Source code is directly translated into machine code | Source code is executed by the interpreter/VM at runtime | Source code is translated into another existing language |

| Execution speed | Fastest - machine code is executed directly by the CPU | Slowest - each instruction is processed by the interpreter at runtime | Depends on the target language |

| Portability | Low - compiled binary is specific to OS and CPU architecture | High - program can run on any platform that has an interpreter for it | Depends on the target language |

| Ease of Implementation | Complex - need knowledge of machine code generation and OS-specific binary formats | Easier - AST or bytecode execution logic is simpler to build | Medium - depends on complexity of translation to target language |

| Dependency on external software | Usually none - produces self-contained executables | Interpreter or VM must be installed on the user’s system | Target language’s runtime |

| Examples | C, C++, Rust, Go | Python, JavaScript, Ruby, Lua, Java | TypeScript → JavaScript |

Conclusion

Designing a programming language may seem like a mountain to climb, but as I hope to have shown, each step is made of understandable and manageable steps. Each step builds on the last to create your very own language that works in the way you define.

Of course, we’ve only scratched the surface here. Every topic we’ve touched on opens the door to a much deeper world. There are also countless other paths to explore - type systems, memory models, tooling and more! But don’t let that overwhelm you. You don’t need to understand everything to get started - the best way to learn is simply by building.

If you’re feeling inspired, I’d highly recommend starting by following Crafting Interpreters by Robert Nystrom, which guides you through the theory and implementation of the ideas covered here, and points you to exciting next steps. Once you’ve worked through that, try designing your own language - it doesn’t have to be groundbreaking. Even the simplest language you create will be an incredibly rewarding learning experience.

Good luck, and happy language building!

Resources

- Crafting Interpreters by Robert Nystrom

- Engineering a Compiler by Keith D. Cooper & Linda Torczon

- Compilers: Principles, Techniques and Tools by Alfred Aho, Monica Lam, Ravi Sethi, and Jeffrey Ullman

- Types and Programming Languages by Benjamin C. Pierce

- Programming Language Pragmatics by Michael L. Scott

- Wikipedia article on Backus-Naur Form (BNF)

- Wikipedia article on compiler optimisation

- Wikipedia article on computer architectures